Overview

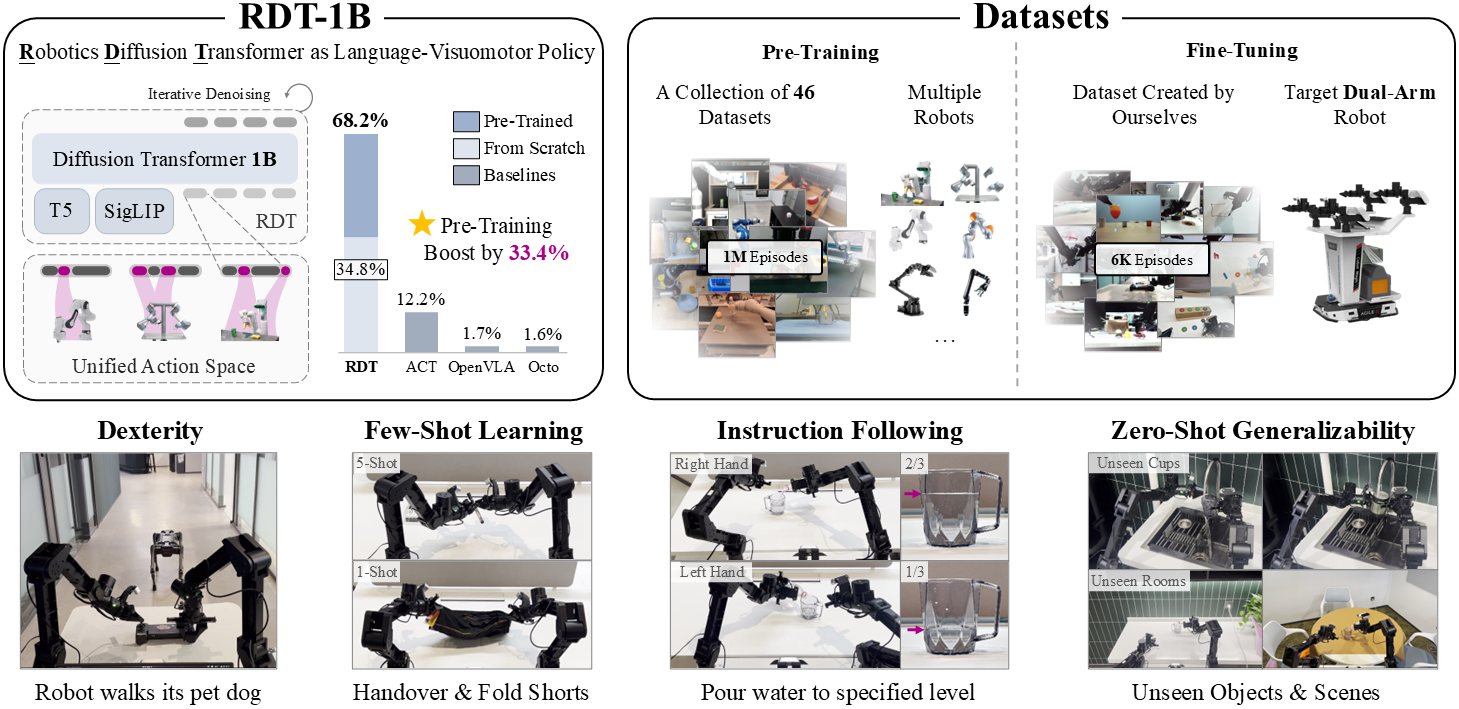

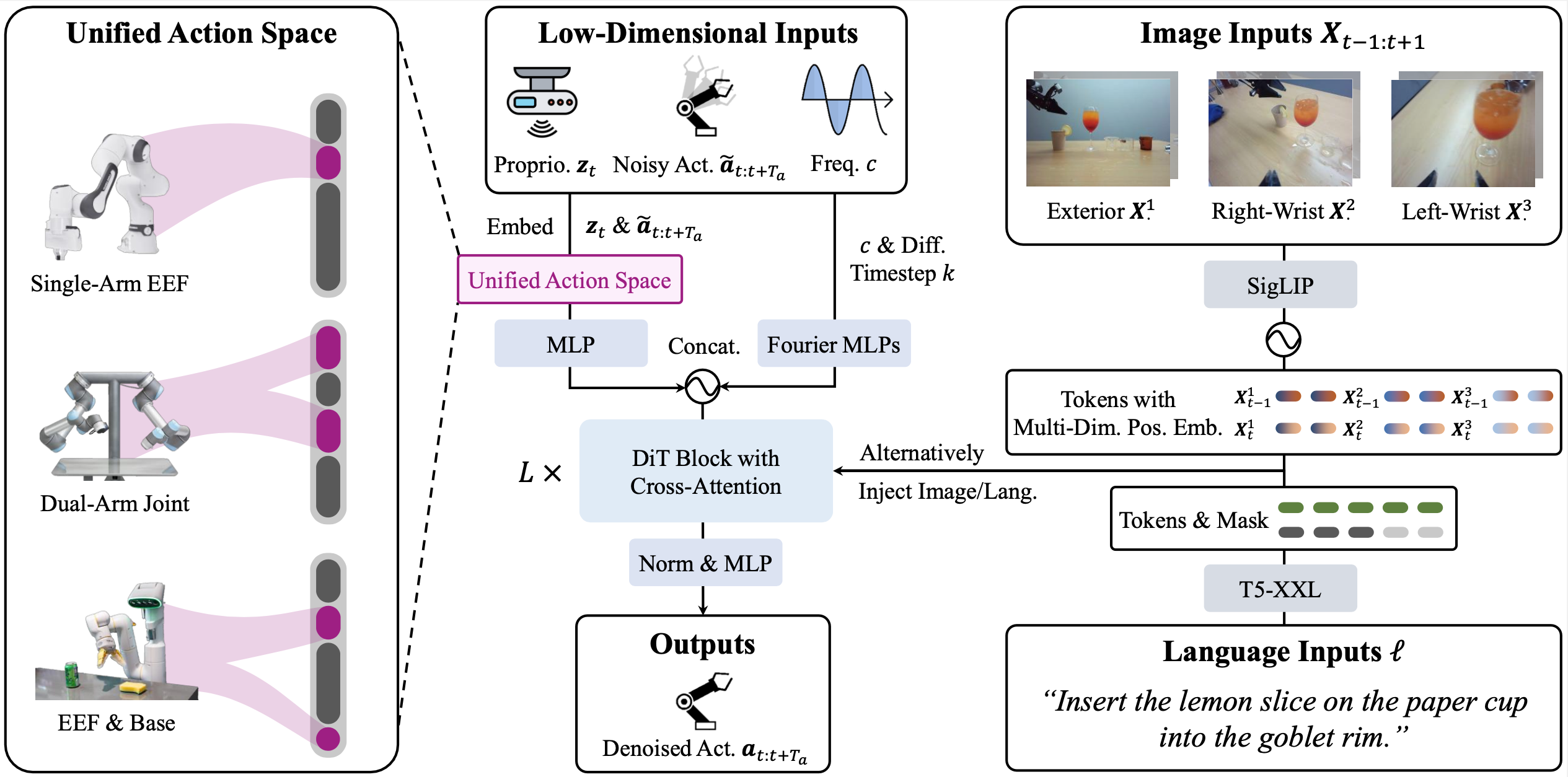

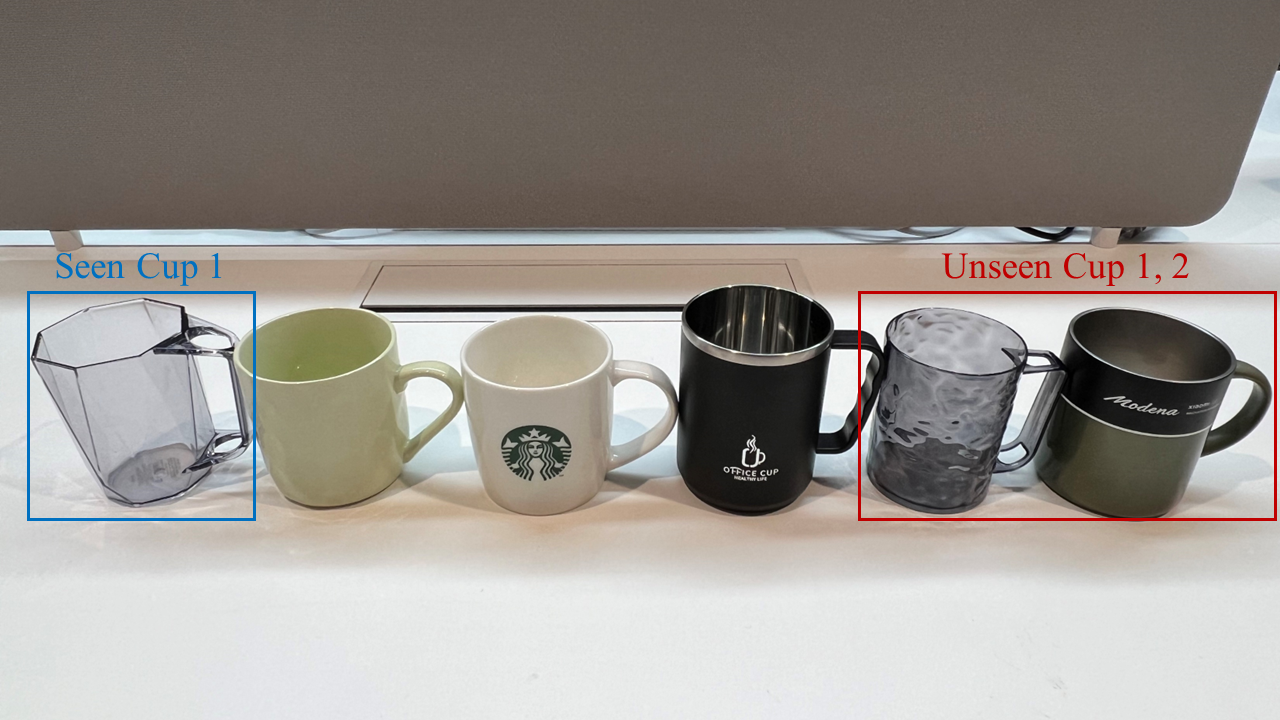

We present the Robotics Diffusion Transformer (RDT-1B), a 1.2B-parameter model and the largest diffusion-based foundation model for robotic manipulation. It is pre-trained on the largest multi-robot collection, comprising 46 datasets with 1M+ episodes. To boost its bimanual capability, we collected 6K+ episodes (one of the largest such datasets to date) on the ALOHA dual-arm robot for fine-tuning. RDT-1B sets a new benchmark in dexterity, zero-shot generalizability, and few-shot learning. It supports control of almost all modern manipulators (e.g., dual-arm, joints, EEFs, and even wheeled locomotion) and is ready for the community to fine-tune on their own robots 🚀!

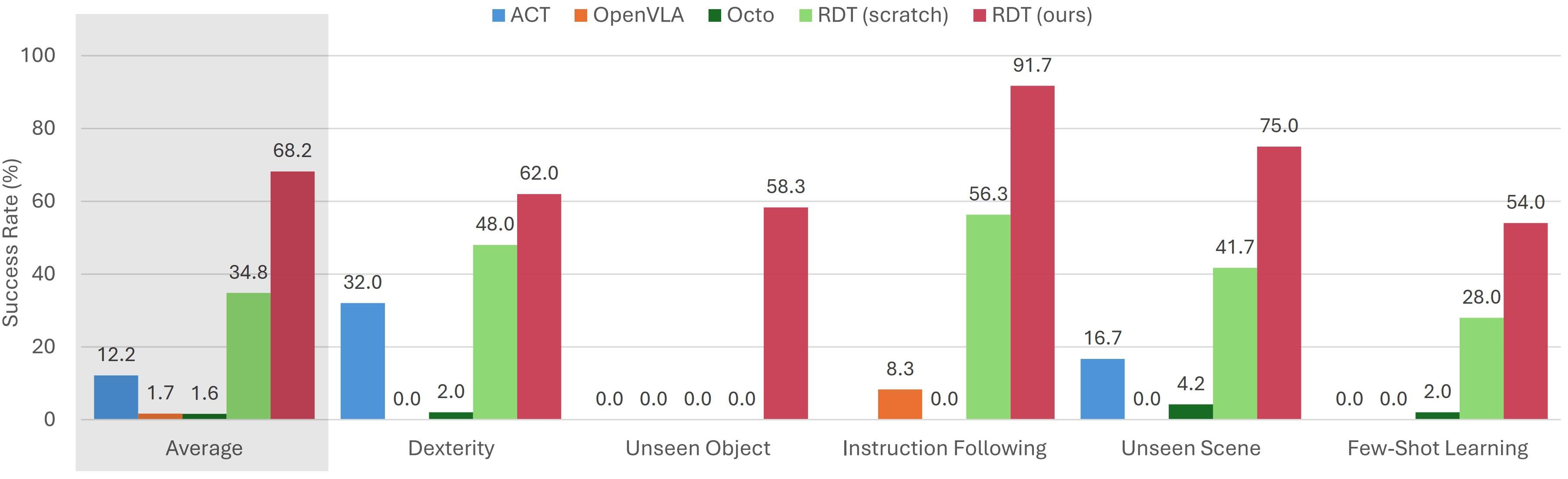

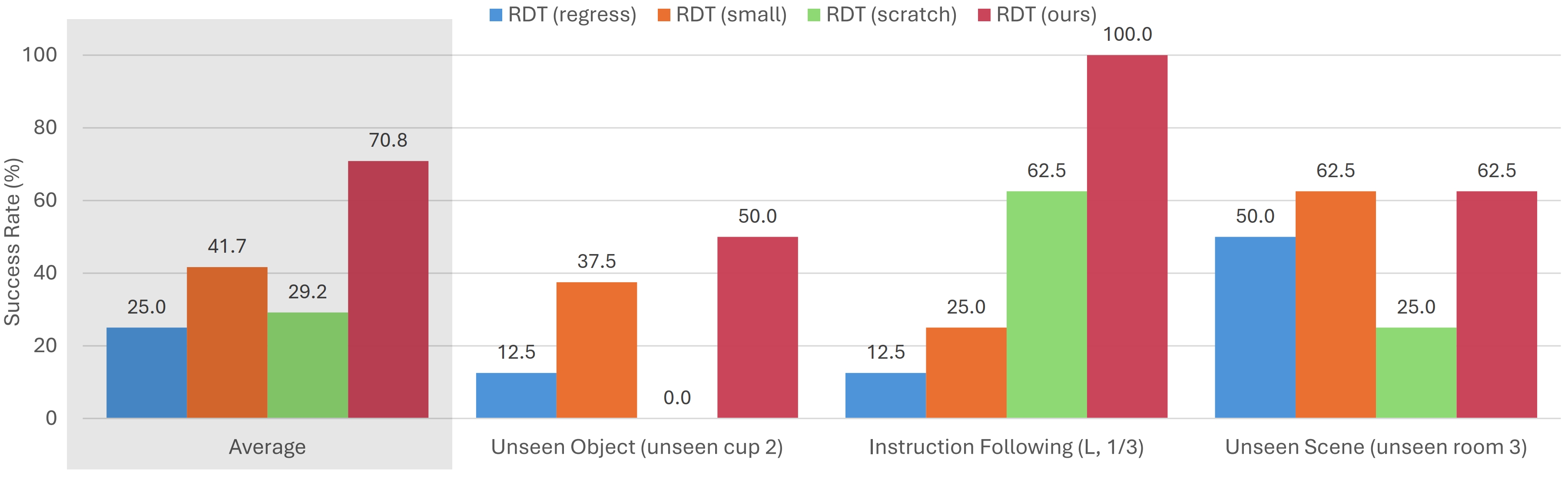

Results show that all components are crucial for the success of RDT.
